The Race for Data: Inside the Explosive Growth of the Data Center Industry

Source: TRG Datacenters

Introduction

Tech companies are spending unprecedented sums on artificial intelligence research and development, yet their leaders say it is not enough. Executives at the companies on the front lines of AI development, such as Microsoft, Amazon, and Meta (often referred to as the “hyperscalers”), claim that demand for computing power is rising so rapidly that they cannot keep up. The world today simply does not have enough infrastructure to build, train, and deploy AI systems at scale. The hyperscalers are trying to change that.

To train an AI model like the ones behind ChatGPT, you need enormous amounts of computing power. Thousands of the world’s most powerful computers run 24/7 with minimal human intervention. These computers live in data centers all over the world, along with the networking, cooling, and power infrastructure needed to run them. The world is demanding more computing power, and there are not enough data centers to satisfy that demand. McKinsey & Co projects that global spending on data centers will reach nearly $7 trillion by 2030. The next industrial revolution will not be driven by steel or oil; it will be driven by data.

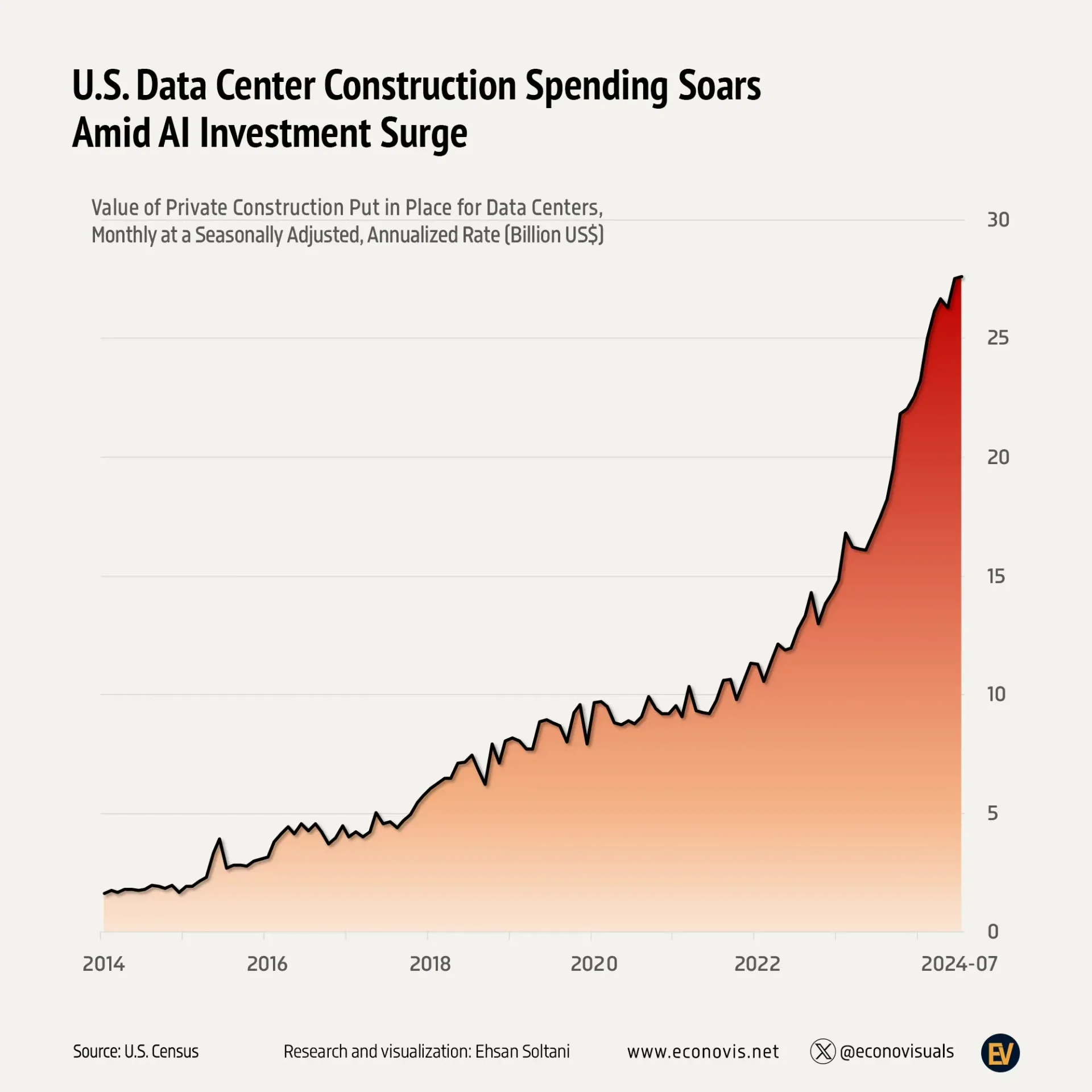

Source: US Census

The Boom

Data centers used to be primarily shared facilities. Colocation providers like Equinix own and operate buildings and rent out space within them so that companies can run their servers there. These data centers still exist; however, the hyperscalers have begun building their own massive data centers around the world to expedite AI research and development. Data centers currently average around 30,000 square feet, but these new projects dwarf the old conception of the facilities. OpenAI’s Stargate Project, for example, just announced the construction of its fourth site, a $7 billion, 2.2 million square foot data center in Saline, Michigan. Elon Musk and xAI built “the biggest supercomputer in the world” in Memphis, Tennessee, named Colossus. Colossus currently houses 200,000 GPUs, with a goal of 1 million.
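To put those figures side by side, here is a back-of-the-envelope sketch in Python. It uses only the approximate numbers quoted above; the variable and function names are illustrative, and the results should be read as rough orders of magnitude rather than precise measurements.

```python
# Approximate figures quoted in the article; none are exact measurements.
AVERAGE_DATA_CENTER_SQFT = 30_000   # typical data center today
SALINE_SITE_SQFT = 2_200_000        # announced Saline, Michigan site
COLOSSUS_GPUS_NOW = 200_000         # GPUs currently in Colossus
COLOSSUS_GPUS_GOAL = 1_000_000      # stated GPU target


def scale_factor(new: float, baseline: float) -> float:
    """Return how many times larger `new` is than `baseline`."""
    return new / baseline


footprint_ratio = scale_factor(SALINE_SITE_SQFT, AVERAGE_DATA_CENTER_SQFT)
gpu_ratio = scale_factor(COLOSSUS_GPUS_GOAL, COLOSSUS_GPUS_NOW)

print(f"Saline site vs. average data center: about {footprint_ratio:.0f}x the floor area")
print(f"Colossus goal vs. current GPU count: {gpu_ratio:.0f}x")
```

By this rough measure, the Saline site alone would cover about as much floor area as 73 average data centers.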

The AI boom has companies erecting data centers all over the world, but some locations have emerged as hot spots. Places like Northern Virginia, Phoenix, Dublin, and Singapore are favored for advantages like reliable power, fiber connectivity, and a favorable regulatory climate. By the end of 2023, data centers in Northern Virginia, nicknamed Data Center Alley, were consuming enough electricity to power 800,000 homes.

Source: NREL

This explosive growth, however, comes with mounting consequences, both for the companies racing to build and for the communities that host them. Power grids, water systems, and local governments are struggling to keep up. Bain projects that, by 2030, data centers could consume 9% of total US electricity. The US does not currently have the energy infrastructure to support this demand, and the environment is suffering the consequences.

The Backlash

Like the Industrial Revolution of the 19th century, the data center boom has had dramatic environmental and local repercussions. A single ChatGPT query is estimated to use roughly 10 times as much electricity as a Google search. Forbes reports that an average ChatGPT conversation consumes about as much water as a standard water bottle holds. As AI continues to scale at an unprecedented pace, these detrimental effects will only be amplified. The concerns are garnering more national attention; however, there does not seem to be an immediate solution.

The national power grid is struggling to accommodate the data center boom. The grid was designed decades ago to bring power to residential and dispersed industrial communities, not to meet the magnitude and concentration of demand we are seeing now. Combine this with the fact that data centers can be built much faster than the grid can be upgraded, and it becomes apparent that energy is a constraint on AI development in the US. Hopefully, with more thoughtful data center placement, grid-friendly energy consumption, and a focus on renewable energy sources, we can reduce the load until the grid catches up.

Because the computers in data centers are prone to overheating, the facilities consume massive amounts of water for cooling. Loudoun County, the home of Data Center Alley, consumed nearly 2 billion gallons of water in 2023, a 63% increase from 2019. To make matters worse, the majority of data centers are located in regions with high levels of water stress. Local governments are pushing back, fearing higher water costs and supply risks for residents. Companies are being forced to search for more efficient and sustainable cooling solutions to shrink their environmental footprint.
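The water figures above imply a 2019 baseline that the article does not state. A minimal sketch of that arithmetic follows, assuming the 2023 total is treated as a round 2 billion gallons (the text says "nearly", so this is an upper bound) and that the 63% increase is measured relative to 2019. The names are illustrative only.

```python
# Figures from the article; the 2023 total is rounded up from "nearly 2 billion".
WATER_2023_GALLONS = 2_000_000_000
INCREASE_SINCE_2019 = 0.63  # a 63% increase, expressed as a fraction


def implied_baseline(current: float, fractional_increase: float) -> float:
    """Back out the starting value from a current value and its growth."""
    return current / (1 + fractional_increase)


baseline_2019 = implied_baseline(WATER_2023_GALLONS, INCREASE_SINCE_2019)
added_since_2019 = WATER_2023_GALLONS - baseline_2019

print(f"Implied 2019 consumption: about {baseline_2019 / 1e9:.2f} billion gallons")
print(f"Added since 2019: about {added_since_2019 / 1e9:.2f} billion gallons")
```

Under these assumptions, consumption in 2019 would have been roughly 1.2 billion gallons, meaning about three quarters of a billion gallons of annual demand were added in four years.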

The Bubble?

Naturally, all of this spending and disruption has people and markets concerned. Are we overbuilding the future? Could this be a repeat of the fiber optic flop of the ’90s, when only 5% of the infrastructure built for anticipated internet growth was in use by 2001? This time seems different.

Unlike the dot-com bubble, which was propelled by an army of startups, this revolution is being led by the largest companies in the world. Even with the cautionary tale of the early 2000s in mind, the brightest minds in the world have continued to advocate for the expansion of AI research and development. If demand for computing power keeps growing at a seemingly exponential rate, the hyperscalers have no choice but to continue building out data center infrastructure. However, we must approach this growth through the lens of sustainability and caution. The race for data is transforming our physical and digital landscapes alike, but if we fail to match innovation with foresight, the very infrastructure driving the next industrial revolution could become its greatest limitation.